Research Article - (2016) Volume 2, Issue 3

Background: Over the last two centuries, the business world has changed from an industrial world to a world of service-based organizations. Thus, the main concern of efficiency researchers shifted toward service providers, such as healthcare organizations. Until recently, most studies measuring the performance and efficiency of healthcare organizations, such as hospitals, considered operational attributes but did not refer to service quality factors obtained via patient satisfaction surveys.

Objective: To investigate how quality of service, along with some other environmental variables such as teaching status, are associated with hospitals’ operational efficiency.

Methods: The study included three phases: establishing service quality attributes using the net promoter score; reducing their dimensionality using principal component analysis; and evaluating hospital efficiency and testing the relationships between research variables using a two-stage procedure. The data were collected from public sources: the American Hospital Association database, the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) patient satisfaction survey results, and the Centers for Medicare and Medicaid Services.

Results: Using data from New Jersey hospitals, we found significant relationships (p value <0.05) between their operational efficiency and global quality of service. Regression analyses reveal that the correlation among teaching hospitals is positive, whilst among non-teaching hospitals, it is negative.

Conclusions: Amongst teaching hospitals, the higher the global service quality index (the overall likelihood that the hospital would be recommended), the higher the efficiency estimate. This suggests that better managed hospitals also achieve higher patient satisfaction ratings. However, amongst non-teaching hospitals, the negative correlation implies a trade-off between service quality and efficiency.

Keywords: Data envelopment analysis (DEA); HCAHPS; Health economics; Hospital efficiency; Patient experience; Service quality; Teaching hospitals

Over the last two centuries, the world has changed from an industrial world to a service-oriented commercial world. Thus, the main concern of economics and efficiency researchers shifted toward service providers, such as healthcare organizations and financial institutions [1]. During the last few decades, healthcare services have become one of the fastest growing service sectors in the global economy, driven by rising healthcare system costs, especially in the US [2].

Healthcare services, particularly hospitals, are by nature extremely resource intensive, so increasing their efficiency can potentially yield substantial savings. However, unlike decision makers in other types of service organizations, hospital decision makers have traditionally been guided more by clinical and medical considerations than by quality issues [3]. Unfortunately, some of these decisions led to “a simple medical error” [4]; they could have been revised had hospital directors been aware of better practices [5]. Such events triggered the need to promote access to “high-quality care that is effective, efficient, safe, timely, patient-centered, and equitable” [6].

The call for operations research studies in the healthcare sector was raised about 50 years ago [7]. Since then, healthcare organizations have been evaluated frequently in order to streamline and increase control over processes, outcomes and efficiency [8], and numerous studies have been conducted [9]. Most studies considered only operational attributes; the minority that did include clinical quality attributes [10,11] did not refer to service quality obtained via patient satisfaction surveys [12-14]. Quality in healthcare services is a multidimensional concept: not easily defined and even more difficult to quantify [15]. The main difficulty usually lies in the perception that healthcare organizations actually have two forms of quality: clinical (technical) quality, focusing on the “what”, and service (functional) quality, focusing on the “how” [16,17].

These calls for research and subsequent action triggered many studies over the years, resulting in many published papers on the efficiency of healthcare organizations [18]. Some of them advocated “a better performance measure of hospitals that would include both quality of care and efficiency of the process” [19]. Others used a mixture of quality attributes and operations research techniques for efficiency measurement [20]; evaluated healthcare efficiency measures [21]; or checked the actual outcomes of governmental reforms in healthcare [22].

The call for research also motivated the development and operation of dozens of survey systems that measure patient or caregiver experience [23]. The most comprehensive patient experience survey in US healthcare markets, the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey, was established over a decade ago [24] and is conducted quarterly. Its results have been used in several studies of patient experience. For example, Jha et al. [25] examined the relationship between several key hospital attributes (e.g., for-profit/not-for-profit status and academic status) and patient experience. Similarly, Isaac et al. [26] tested the relationship between clinical performance, hospitals’ environmental characteristics (such as profit status and teaching affiliation) and patient satisfaction.

However, “the effectiveness of patient experience as a performance measure is not well researched” [23]. To date, we have not found any published paper studying the relationships between a hospital’s operational efficiency and its service quality (SQ) measures. Thus, our research focuses on linking hospitals’ SQ measures with their operational outcomes, and suggests an alternative efficiency measurement model. Furthermore, SQ in healthcare services currently stands at the forefront of professional managerial attention, as it is perceived as a means of achieving better health, increasing patient satisfaction, reducing costs and gaining a competitive advantage [27].

Analytical methods

Our study used a two-stage efficiency measurement procedure: the first stage estimates efficiency scores, and the second stage explains variations in efficiency. The advantages of a two-stage procedure for guiding public policy-makers and assisting managerial decision-making were discussed more than two decades ago [28], and the procedure has since been employed in many studies [29].

For the first-stage efficiency measurements, we used data envelopment analysis (DEA) [30]. DEA is a non-parametric, mathematical programming approach that evaluates the relative efficiency of a set of homogeneous decision-making units (hospitals, in our case) by comparing their inputs and outputs [31]. It is the most common method for measuring hospital efficiency [32]. We ran the DEA following Golany and Roll’s suggested workflow [33] and the assumptions proposed by Fried et al. [34].
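The first stage can be illustrated with a small linear-programming sketch. It assumes an input-oriented, constant-returns-to-scale (CCR) envelopment model, solved once per hospital with SciPy's `linprog` and the HiGHS solver; the study does not state the DEA orientation or returns-to-scale assumption, and the function name `dea_ccr_input` and the toy data are hypothetical. A score of 1 places a unit on the efficiency frontier; a score θ < 1 means that, under these assumptions, the unit could in principle produce its outputs with θ times its inputs.

```python
import numpy as np
from scipy.optimize import linprog


def dea_ccr_input(inputs, outputs):
    """Input-oriented CCR (constant returns to scale) DEA scores (hypothetical helper).

    inputs:  array of shape (n_units, n_inputs), e.g. columns BDS, FTE
    outputs: array of shape (n_units, n_outputs), e.g. columns A-ADM, BRS, OPV
    Returns one technical-efficiency score in (0, 1] per unit.
    """
    X = np.asarray(inputs, dtype=float)
    Y = np.asarray(outputs, dtype=float)
    n, m = X.shape
    s = Y.shape[1]
    scores = np.empty(n)
    for o in range(n):
        # Decision variables: [theta, lambda_1, ..., lambda_n]; minimise theta.
        c = np.zeros(n + 1)
        c[0] = 1.0
        # Input rows: sum_j lambda_j * x_ij - theta * x_io <= 0
        a_in = np.hstack([-X[o].reshape(m, 1), X.T])
        # Output rows: -sum_j lambda_j * y_rj <= -y_ro (composite output >= y_ro)
        a_out = np.hstack([np.zeros((s, 1)), -Y.T])
        res = linprog(
            c,
            A_ub=np.vstack([a_in, a_out]),
            b_ub=np.concatenate([np.zeros(m), -Y[o]]),
            bounds=[(0, None)] * (n + 1),  # theta >= 0, lambda_j >= 0
            method="highs",
        )
        if not res.success:
            raise RuntimeError(f"LP failed for unit {o}: {res.message}")
        scores[o] = res.x[0]
    return scores


if __name__ == "__main__":
    # Toy data: one input, one output, two units (not study data).
    print(dea_ccr_input([[2.0], [4.0]], [[2.0], [2.0]]))
```

A variable-returns-to-scale (BCC) variant would add the convexity constraint that the λ values sum to 1, passed through `linprog`'s `A_eq`/`b_eq` arguments.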

The second stage included multivariate ordinary least squares (OLS) regression models [35], with the efficiency scores obtained in the DEA stage as the dependent variable and several SQ attributes and environmental variables as explanatory variables.
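A minimal sketch of a second-stage OLS fit that returns the quantities reported per variable in Table 4 (coefficient, SE, p-value) together with R². It assumes classical (homoskedastic) standard errors, since the study does not say which variance estimator was used; the function name `ols_summary` is hypothetical, and in practice a statistics package would normally be used.

```python
import numpy as np
from scipy import stats


def ols_summary(X, y):
    """OLS with an intercept (hypothetical helper).

    X: (n, k) explanatory variables (e.g. SQC1, SQC2, SQI, ...)
    y: (n,) first-stage DEA efficiency scores (TE)
    Returns (coefficients, standard errors, two-sided p-values, R^2);
    the first entry of each array refers to the intercept.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = X.shape[0]
    A = np.column_stack([np.ones(n), X])          # prepend the intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)  # least-squares coefficients
    resid = y - A @ beta
    dof = n - A.shape[1]                           # residual degrees of freedom
    sigma2 = resid @ resid / dof                   # residual variance estimate
    cov = sigma2 * np.linalg.inv(A.T @ A)          # classical covariance matrix
    se = np.sqrt(np.diag(cov))
    p = 2 * stats.t.sf(np.abs(beta / se), dof)     # two-sided t-test p-values
    r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta, se, p, r2
```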

Data sources

The study used three public sources: the American Hospital Association (AHA) database for operational variables; the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) patient satisfaction survey results for service quality attributes; and the Centers for Medicare and Medicaid Services (CMS) website for all other variables.

Based on the median number of hospitals in the studies covered by survey papers (55 hospitals) [36], we searched the AHA database for a suitable US state as the target dataset. Ultimately, owing to data availability and accuracy, the study included 51 general acute-care hospitals in the state of New Jersey, all owned by non-profit organizations and all classified as urban.

For each target hospital, considering the common types and number of variables used for efficiency measurement (e.g., [37]), we selected and extracted eight attributes from the AHA database. Six operational attributes described hospital size for the DEA stage; two organizational attributes, the existence of an obstetrics service and the hospital’s teaching status, were used in the second stage. From the CMS website, we downloaded these hospitals’ HCAHPS patient satisfaction survey results (more than 15,000 aggregate responses), as well as their case-mix and average length-of-stay figures.

Data preparation

Research variables were categorized into three groups: operational variables, SQ attributes and environmental variables. The operational variables were: (1) total number of beds (BDS); (2) total personnel in full-time equivalents (FTE); (3) hospital’s total expenses (T-EXP); (4) total number of admissions (ADM); (5) total number of outpatient visits (OPV); and (6) total number of births (BRS), only for hospitals with an obstetrics service. To take medical complexity into account, the variable ADM was replaced by a new variable, A-ADM (adjusted total admissions), calculated as the product of ADM and case-mix, as suggested by Ozcan [29].
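The admissions adjustment is a per-hospital product of ADM and case-mix. A short sketch with illustrative numbers (not study data); the function name `adjusted_admissions` is hypothetical.

```python
def adjusted_admissions(admissions, case_mix):
    """A-ADM = ADM x case-mix, computed hospital by hospital (hypothetical helper)."""
    if len(admissions) != len(case_mix):
        raise ValueError("admissions and case_mix must have the same length")
    if any(c <= 0 for c in case_mix):
        raise ValueError("case-mix values must be positive")
    return [a * c for a, c in zip(admissions, case_mix)]


# Illustrative values only (not study data).
print(adjusted_admissions([20000, 8000], [1.5, 1.25]))
```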

The HCAHPS survey includes 22 service-related, multiple-choice questions [24]; its results are published as 10 aggregated experimental quality (EQ) attributes, grouped into three SQ topics: Composite, Individual and Global (Table 1). To determine the second-stage SQ attributes, we adopted the principles of the net promoter score (NPS) [38], as proposed by York and McCarthy [39], who examined the pros and cons of NPS as a better measure of service quality in healthcare. Using these principles, all EQ measures were translated into SQ ratings by subtracting the share of “detractors” from the share of “advocates”.
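The NPS translation reduces to a difference of shares. This sketch assumes that “advocates” correspond to the most favourable HCAHPS response category (for example “always”, or 9 to 10 on the 0 to 10 rating) and “detractors” to the least favourable one; the study does not spell out this mapping, and the function name `nps_rating` is hypothetical.

```python
def nps_rating(advocate_pct, detractor_pct):
    """NPS-style SQ rating on a -1..1 scale (hypothetical helper).

    advocate_pct / detractor_pct: percentages (0-100) of respondents in the
    most and least favourable response categories (assumed mapping).
    """
    for v in (advocate_pct, detractor_pct):
        if not 0 <= v <= 100:
            raise ValueError("percentages must lie in [0, 100]")
    return (advocate_pct - detractor_pct) / 100.0


# Illustrative: 78% advocates, 6% detractors.
print(nps_rating(78, 6))
```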

| Topic | Attribute | Description | Label¹ |
|---|---|---|---|
| I. Composite | | | |
| | Nurses | Communication with the nurses | EQ1 |
| | Doctors | Communication with the doctors | EQ2 |
| | Help | Receiving help as soon as wanted | EQ3 |
| | Pain | Pain control | EQ4 |
| | Medicines | Explaining medicines before administering them | EQ5 |
| | Info | Information about what to do during recovery at home | EQ8 |
| II. Individual | | | |
| | Clean | Clean room and bathroom | EQ6 |
| | Quiet | Quiet in the room at night | EQ7 |
| III. Global | | | |
| | Rating | Hospital overall rating, on a scale of 0 to 10 | EQ9 |
| | SQI | Willingness to recommend the hospital | EQ10 |

Table 1: HCAHPS experimental quality (EQ) attributes by topic.

Finally, to avoid possible redundancy, three new SQ variables, representing the three SQ topics were generated. We ran a principal component analysis on the first two SQ topics (Composite & Individual) to reduce and align their dimensionality, resulting in the creation of two new SQ components, named SQC1 and SQC2; we also chose EQ10 (henceforth, SQI) to represent the third topic (Global), as its correlation with EQ9 was nearly one (r=0.97).
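The dimensionality-reduction step can be sketched as a plain PCA on standardized ratings (an SVD of the z-scored matrix). The study does not state how the SQC1 and SQC2 scores were computed; because Table 3 lists them as groups of EQ attributes and Table 2 reports them on the rating scale, this sketch assumes that each attribute is assigned to the component on which it loads most heavily and that a component's score is the mean rating of its attributes. No rotation (such as varimax) is applied, and the function name `pca_group_scores` is hypothetical.

```python
import numpy as np


def pca_group_scores(ratings, n_components=2):
    """PCA on standardized ratings; group attributes by dominant component.

    ratings: (n_hospitals, n_attributes) matrix of NPS-style SQ ratings.
    Returns (groups, scores, explained):
      groups    - component index assigned to each attribute,
      scores    - (n_hospitals, n_components) mean rating per group (assumption),
      explained - share of total variance explained by each retained component.
    """
    X = np.asarray(ratings, dtype=float)
    n = X.shape[0]
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)  # z-scores: PCA on correlations
    _, sing, vt = np.linalg.svd(Z, full_matrices=False)
    eigvals = sing**2 / (n - 1)                        # correlation-matrix eigenvalues
    loadings = vt[:n_components].T * np.sqrt(eigvals[:n_components])
    groups = np.argmax(np.abs(loadings), axis=1)       # dominant component per attribute
    # Component score = mean raw rating of the attributes in that group
    # (an empty group would yield NaN with a RuntimeWarning).
    scores = np.column_stack(
        [X[:, groups == k].mean(axis=1) for k in range(n_components)]
    )
    explained = eigvals[:n_components] / eigvals.sum()
    return groups, scores, explained
```

The EQ9/EQ10 check is a single Pearson correlation, for example `np.corrcoef(eq9, eq10)[0, 1]`.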

Data analysis

Analysis of the input values of the 51 hospitals shows that the average hospital had 328 beds (BDS), ranging from small hospitals with about 100 beds to very large hospitals with about 700 beds. In terms of personnel, the average FTE is about 2,000 employees, ranging from 600 to over 7,000. In terms of budget, total expenses range from 100 million to almost 1.4 billion USD, with a median of about 250 million USD.

Regarding output volumes, average adjusted admissions (A-ADM) is about 27,000 patients, ranging from 7,000 patients in the smallest hospital to almost 20 times as many in the largest. The number of outpatient visits (OPV) is considerably larger than the number of admissions (ADM), varying from about 50,000 to almost three million per year. Of the 51 hospitals, 29 (57 percent) are teaching and 22 (43 percent) are non-teaching hospitals; on average, teaching hospitals are significantly (p value <0.01) larger, employ more inputs and produce more outputs than their non-teaching counterparts. Forty-five hospitals (88 percent) have an obstetrics service, with an average of about 2,000 births; the rest are small hospitals with fewer than 200 beds, whose total expenses (T-EXP) and personnel (FTE) are in the lowest quartile of the studied hospitals (Table 2).

| Variable¹ | Pooled mean | Pooled SD | Teaching mean | Teaching SD | Non-teaching mean | Non-teaching SD |
|---|---|---|---|---|---|---|
| Beds (BDS) | 328 | 160 | 379 | 158 | 275 | 138 |
| Personnel (FTE) | 2,002 | 1,328 | 2,497 | 1,452 | 1,350 | 777 |
| Total expenses, USD (T-EXP) | 3.24E+08 | 2.35E+08 | 4.09E+08 | 2.62E+08 | 2.12E+08 | 1.30E+08 |
| Adjusted admissions² (A-ADM) | 27,556 | 21,394 | 34,288 | 25,065 | 18,048 | 10,934 |
| Births (BRS)³ | 1,981 | 1,522 | 2,486 | 1,522 | 1,151 | 550 |
| Outpatient visits (OPV) | 273,063 | 407,868 | 355,948 | 520,930 | 163,805 | 112,620 |
| Service Quality Component 1 (SQC1) | 0.549 | 0.068 | 0.552 | 0.059 | 0.545 | 0.080 |
| Service Quality Component 2 (SQC2) | 0.438 | 0.063 | 0.467 | 0.053 | 0.399 | 0.055 |
| Service Quality Index (SQI) | 0.593 | 0.112 | 0.644 | 0.093 | 0.526 | 0.101 |
| Average length of stay, days (ALoS) | 5.761 | 0.681 | 5.930 | 0.587 | 5.539 | 0.743 |

Table 2: Descriptive statistics for both teaching and non-teaching hospitals (n=51).

This table summarizes the major descriptive statistics of the research variables, taking into account the hospital's teaching status.

The analysis of SQ attributes shows that the average SQI is about 0.60, ranging from a high of 0.81 to a low of 0.25. The averages of the other two SQ components, SQC1 and SQC2, are lower, about 0.55 and 0.44 respectively, with maxima of 0.65 and 0.55 and minima of 0.30 and 0.25. Additional tests show that, on average, SQC2 and SQI are significantly (p value <0.001) higher in teaching hospitals than in non-teaching hospitals, whereas the difference in SQC1 ratings is not significant.

Following the recommendations of Golany and Roll [33], we omitted the variable T-EXP from the DEA model because it was highly correlated with the other two input variables, BDS and FTE (r=0.89 and r=0.96, respectively). Ultimately, the research model consisted of 13 variables (Table 3).
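The screening that led to dropping T-EXP can be sketched as a pairwise Pearson-correlation check. The 0.85 cut-off and the function name `highly_correlated_pairs` are illustrative assumptions, not values taken from Golany and Roll [33].

```python
from itertools import combinations

import numpy as np


def highly_correlated_pairs(data, threshold=0.85):
    """Return (name_a, name_b, r) for variable pairs with |Pearson r| >= threshold.

    data: dict mapping variable name (e.g. "BDS", "FTE", "T-EXP") to a sequence
    of values, one per hospital. The threshold is a hypothetical default.
    """
    flagged = []
    for a, b in combinations(list(data), 2):
        r = np.corrcoef(data[a], data[b])[0, 1]
        if abs(r) >= threshold:
            flagged.append((a, b, float(r)))
    return flagged
```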

| Stage | Category | Name | Description | Label |
|---|---|---|---|---|
| I. Data envelopment analysis (DEA) | Input variables | BDS | Total number of beds | I1 |
| | | FTE | Total number of personnel (full-time equivalents) | I2 |
| | Output variables | A-ADM¹ | Adjusted number of total admissions | O1 |
| | | BRS | Total number of births | O2 |
| | | OPV | Total number of outpatient visits | O3 |
| II. Regression analysis (OLS) | Outcome | TE² | Technical efficiency | Y |
| | Service quality³ | SQC1³ | "Nursing topics" (EQ1, EQ3, EQ4, EQ5, EQ8) | X1 |
| | | SQC2⁴ | "Individual & Docs topics" (EQ2, EQ6, EQ7) | X2 |
| | | SQI | "Willingness to recommend a hospital" (EQ10) | X3 |
| | Organizational | ALoS⁵ | Average length of stay (days) | X4 |
| | | T-EXP | Hospital's total expenses (USD) | X5 |
| | Categorical (dummy) | OBS⁶ | Obstetrics service status | X6 |
| | | TEA⁷ | Teaching status | X7 |

Table 3: Final research variables and stages.

This table summarizes the final research variables, according to their stage.

Stage I: The DEA model

The average technical efficiency (ATE) of the 51 hospitals is 0.35; 50 of them (98 percent) were classified as inefficient. A further analysis by academic status shows that both the test of mean ATE and the analysis of variance (ANOVA) were statistically significant (p value <0.05 and p value <0.001, respectively), indicating a statistically significant difference between the efficiency frontiers of teaching and non-teaching hospitals.
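A sketch of the summary and group comparison just described. The study names a mean test and an ANOVA without further detail; this sketch assumes Welch's t-test (`scipy.stats.ttest_ind` with `equal_var=False`) for the means and a one-way ANOVA (`scipy.stats.f_oneway`). The function name `summarize_efficiency` and the tolerance are hypothetical.

```python
import numpy as np
from scipy import stats


def summarize_efficiency(scores, teaching, tol=1e-6):
    """ATE, number of inefficient units, and teaching vs non-teaching tests.

    scores:   DEA technical-efficiency scores, one per hospital
    teaching: booleans (True = teaching hospital)
    Returns (ate, n_inefficient, welch_p, anova_p).
    """
    scores = np.asarray(scores, dtype=float)
    teaching = np.asarray(teaching, dtype=bool)
    ate = float(scores.mean())
    # A unit counts as inefficient when its score is (numerically) below 1.
    n_inefficient = int(np.sum(scores < 1.0 - tol))
    # Welch's t-test (unequal variances) on mean TE by teaching status.
    welch = stats.ttest_ind(scores[teaching], scores[~teaching], equal_var=False)
    # One-way ANOVA across the two groups.
    anova = stats.f_oneway(scores[teaching], scores[~teaching])
    return ate, n_inefficient, float(welch.pvalue), float(anova.pvalue)
```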

Stage II: Regression models

The first regression model is the following multivariate OLS model:

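$$\mathrm{TE} = \beta_0 + \beta_1\,\mathrm{SQC1} + \beta_2\,\mathrm{SQC2} + \beta_3\,\mathrm{SQI} + \beta_4\,\mathrm{ALoS} + \beta_5\,\mathrm{T\text{-}EXP} + \beta_6\,\mathrm{OBS} + \beta_7\,\mathrm{TEA} \qquad (1)$$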

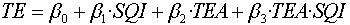

This model was established to describe the relationship between the hospitals’ efficiency scores, as the dependent variable, and the explanatory variables: the SQ variables, average length of stay (ALoS), T-EXP and the two dummy variables (OBS and TEA). Because the outcomes of Model (1) were statistically insignificant (p value >0.2), we ran additional OLS regression models of three kinds: a stepwise multivariate model, an interaction model, and log-based control models (Table 4).

The stepwise regression yielded only one variable significantly (p value <0.05) correlated with the TE score: SQI. This implies that the two SQ components (SQC1 and SQC2), the two environmental variables (ALoS and T-EXP) and the two categorical variables (OBS and TEA) did not contribute significantly to explaining the variance of TE. Thus, the “best” explanatory model consists of SQI alone:

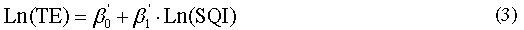
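Stepwise selection can be sketched as forward selection on p-values. The entry threshold (0.05), the absence of a removal step and the function names are assumptions; the study does not report its stepwise settings.

```python
import numpy as np
from scipy import stats


def _last_pvalue(A, y):
    """Two-sided OLS p-value of the last column of design matrix A."""
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    dof = A.shape[0] - A.shape[1]
    sigma2 = resid @ resid / dof
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(A.T @ A)))
    return 2 * stats.t.sf(abs(beta[-1] / se[-1]), dof)


def forward_stepwise(X, y, names, alpha_in=0.05):
    """Forward selection (hypothetical helper): repeatedly add the candidate
    whose p-value is smallest and below alpha_in; stop when none qualifies."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    selected, remaining = [], list(range(X.shape[1]))
    while remaining:
        best_j, best_p = None, alpha_in
        for j in remaining:
            cols = [np.ones(n)] + [X[:, k] for k in selected + [j]]
            p = _last_pvalue(np.column_stack(cols), y)
            if p < best_p:
                best_j, best_p = j, p
        if best_j is None:  # no remaining candidate meets the entry threshold
            break
        selected.append(best_j)
        remaining.remove(best_j)
    return [names[k] for k in selected]
```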

The results of Model (2) show that, although the model itself was significant (p value <0.05), its R² value was quite low (R²=0.12). Therefore, we evaluated the robustness of Model (2) with a log-based control model, Model (3) (Table 4).

Because the control model was only marginally significant (p value =0.091), we searched for a better explanatory model. Following Anderson, Fornell and Rust [40], we introduced the following interaction OLS model, using teaching status:

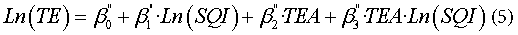

The results of the interaction OLS regression Model (4) show that the model is statistically significant (p value <0.01), as are all of its variables (p value <0.05). To check the robustness of these outcomes, we tested Model (4) against a log-based control model, Model (5).

The results of control Model (5) were also statistically significant (p value <0.05), supporting the robustness of the “SQI × teaching affiliation” interaction Model (4) (Table 4).
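Model (4) can be fitted by adding the product SQI×TEA as an extra column of the design matrix. A minimal NumPy least-squares sketch; the function name `fit_interaction_model` is hypothetical, and significance tests would be computed as for any OLS model.

```python
import numpy as np


def fit_interaction_model(sqi, tea, te):
    """Least-squares fit of TE = b0 + b1*SQI + b2*TEA + b3*(SQI*TEA).

    tea is a 0/1 dummy. Returns the coefficient vector [b0, b1, b2, b3].
    """
    sqi = np.asarray(sqi, dtype=float)
    tea = np.asarray(tea, dtype=float)
    # Columns: intercept, SQI, TEA dummy, SQI x TEA interaction.
    A = np.column_stack([np.ones_like(sqi), sqi, tea, sqi * tea])
    beta, *_ = np.linalg.lstsq(A, np.asarray(te, dtype=float), rcond=None)
    return beta
```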

| Predicting model | Variable | Coefficient | SE | p-value |
|---|---|---|---|---|
| 1. Multivariate regression Model (1) | | 0.156# | 0.175 | NS§ |
| | Intercept | 0.437 | 0.516 | NS§ |
| | SQC1 | -0.157 | 0.555 | NS§ |
| | SQC2 | 0.240 | 0.602 | NS§ |
| | SQI | 0.332 | 0.456 | NS§ |
| | ALoS | -0.055 | 0.047 | NS§ |
| | T-EXP | 0.0001 | 0.0001 | NS§ |
| | OBS | -0.017 | 0.088 | NS§ |
| | TEA | -0.023 | 0.066 | NS§ |
| 2. Stepwise regression Model (2) | | 0.123# | 0.167 | 0.011** |
| | Intercept | 0.024 | 0.126 | NS§ |
| | SQI | 0.550 | 0.210 | 0.011** |
| 3. Control Model (3) for Model (2) | | 0.057# | 0.400 | 0.091* |
| | Ln(SQI) | 0.462 | 0.268 | 0.091* |
| 4. Interaction regression Model (4) | | 0.229# | 0.160 | 0.006*** |
| | Intercept | -0.304 | 0.214 | NS§ |
| | SQI | 1.068 | 0.323 | 0.002*** |
| | TEA | 0.673 | 0.279 | 0.020** |
| | SQI×TEA | -1.187 | 0.471 | 0.015** |
| 5. Control Model (5) for Model (4) | | 0.189# | 0.379 | 0.019** |
| | Ln(SQI) | 1.447 | 0.476 | 0.004*** |
| | TEA | -0.929 | 0.345 | 0.0098*** |
| | Ln(SQI)×TEA | -1.658 | 0.615 | 0.0089*** |

Table 4: Summary of the outcomes of the five OLS regression models.

This table summarizes the statistical results of the second-stage OLS regression models.

Predicting technical efficiency

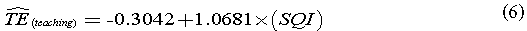

SQI was the only service quality variable found to be significant in the second-stage models. These results support the finding that SQI is significantly correlated with the TE of these 51 hospitals. As there is a statistically significant relationship between hospitals’ TE scores, the overall likelihood that a hospital will be recommended (SQI) and its academic status (TEA), we propose the interaction Model (4) as a predictor of a hospital’s TE, using the following equations:

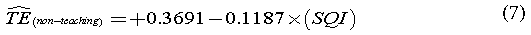

where the left-hand sides of Eqs. (6) and (7) denote the predicted efficiency of teaching and non-teaching hospitals, respectively.

Analysis of Eq. (6) shows that the economic elasticity (EE) of teaching hospitals is just above unity, meaning that an improvement of one percentage point in a teaching hospital’s SQI score yields a similar increase in its TE. In that case, such an improvement could save an aggregate of more than 100 million USD per year. At the same time, Eq. (7) for non-teaching hospitals supports the assumed trade-off between quality and efficiency [41]: here, the motivation to improve SQI ratings conflicts with the need to reduce costs and be more efficient. In this case, the marginal increase in a non-teaching hospital’s annual expenses is estimated at 250,000 USD per percentage point of rating.
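With a dummy interaction, Model (4) splits into one straight line per value of TEA: intercept b0 and slope b1 when TEA = 0, and intercept b0 + b2 and slope b1 + b3 when TEA = 1. The sketch below evaluates both lines with the Model (4) coefficients from Table 4, giving slopes of 1.068 and -0.119. Because SQI and TE are both on 0 to 1 scales, each slope reads as the change in TE, in percentage points, per one-percentage-point change in SQI. The helper names are hypothetical; the 1.068 slope of the TEA = 0 line matches the “just above unity” value reported for teaching hospitals, which suggests, but does not confirm, how the dummy was coded.

```python
def group_lines(b0, b1, b2, b3):
    """Split TE = b0 + b1*SQI + b2*TEA + b3*SQI*TEA into one line per TEA level.

    Returns {tea_level: (intercept, slope)}.
    """
    return {0: (b0, b1), 1: (b0 + b2, b1 + b3)}


def predict_te(sqi, tea, b0, b1, b2, b3):
    """Predicted TE for one hospital under the interaction model."""
    return b0 + b1 * sqi + b2 * tea + b3 * sqi * tea


# Model (4) coefficients as reported in Table 4.
lines = group_lines(-0.304, 1.068, 0.673, -1.187)
for level, (intercept, slope) in lines.items():
    print(f"TEA={level}: TE = {intercept:.3f} {slope:+.3f} * SQI")
```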

Positioning impact matrix (PIM)

The positioning impact matrix (PIM) is a conceptual tool for visualizing research outcomes, based on the theory of concept mapping in healthcare [42]. The plot (Figure 1) offers a way to assess a hospital’s status and relative position before making operational decisions, using a cluster sketch: the dashed oval cluster in the middle represents non-teaching hospitals, and the solid oval on the right represents teaching hospitals.

At a glance, the PIM reveals insights that could take considerable time to extract with common tools (e.g., pivot tables). In our case, the PIM reflects some interesting facts about the hospitals’ relative positions, using four zones (Z1 to Z4):

1. The majority (83 percent) of hospitals in Z1 are non-teaching hospitals.

2. The majority (76 percent) of teaching hospitals are in Z2.

3. Z3 is empty.

4. In Z4 there are only teaching hospitals.

Additionally, using the PIM can highlight some interesting facts about the outlying hospitals, as well:

1. P1 represents the hospital (non-teaching) with the lowest SQI value, although its inefficiency is above the median.

2. P2 represents the hospital with the highest SQI value among the non-teaching hospitals, although it has the lowest efficiency score.

3. P3 has the highest SQI value among the teaching hospitals, but it is far away (75 percent) from the efficiency frontier, represented by P4, and

4. P4 is the most efficient hospital (teaching), lying on the Pareto frontier with an efficiency score of one, although it has only the third highest SQI value.
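The zone logic of the PIM can be approximated by splitting hospitals at the sample medians of SQI and TE. The study does not state how the zone boundaries are drawn or how Z1 to Z4 map to quadrants, so this sketch uses descriptive quadrant labels instead; the median split and the function name `pim_quadrants` are assumptions.

```python
from statistics import median


def pim_quadrants(sqi, te):
    """Label each hospital by whether its SQI and TE exceed the sample medians
    (hypothetical zoning; values equal to a median count as "low")."""
    sqi_med, te_med = median(sqi), median(te)
    labels = []
    for s, t in zip(sqi, te):
        s_part = "high SQI" if s > sqi_med else "low SQI"
        t_part = "high TE" if t > te_med else "low TE"
        labels.append(f"{s_part} / {t_part}")
    return labels
```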

We believe that using the proposed PIM tool could help hospital management obtain a more focused view of their relative position and the potential impact of their decisions. For example, hospitals in Z1 may take into account the possibility of a merger with other hospitals, preferably teaching ones.

Discussion

This study addresses one of the common debates in the healthcare world: “How can we reduce costs in the health sector, while improving the quality of care and access to services?” [43]. Until recent years, most studies measuring the performance and efficiency of hospitals considered only operational attributes, whereas our study addresses the association between hospitals’ service quality and efficiency.

By studying these relationships, we aim to offer a better basis for operational decisions in hospitals. Our statistically significant findings show that, amongst teaching hospitals, the higher the perceived level of quality, the higher the efficiency estimate (Eq. (6)). This suggests that a better managed teaching hospital also achieves higher patient satisfaction ratings. However, amongst non-teaching hospitals, the situation is the opposite: the negative correlation implies a trade-off between service quality and efficiency (Eq. (7)).

In an attempt to find supporting explanations for our results, we analyzed several research and survey papers on teaching hospitals. The two-stage DEA studies of Grosskopf et al. [44], which asked “whether there is a significant difference in the efficiency frontier between teaching and non-teaching hospitals?”, and Harrison et al. [45], which evaluated teaching hospitals’ clinical quality and efficiency, reached similar conclusions, supporting the premise that teaching hospitals can improve quality of care. Similarly, Ayanian and Weissman [46] and Kupersmith [47], who reviewed dozens of research papers assessing quality of care by hospital teaching status, concluded that teaching hospitals performed significantly better than non-teaching ones.

Although these papers did not deal directly with the relationships between service quality, efficiency and teaching status, they carried out comprehensive tests of similar characteristics. Therefore, we believe it is reasonable to draw on some of their conclusions to explain the differences in efficiency and SQ ratings between teaching and non-teaching hospitals in our results, as these papers agreed that teaching hospitals are more efficient and provide better quality of care than non-teaching ones.

This paper presents and summarizes a study that attempted to find answers to some of the meaningful questions raised, both in academia and in the healthcare world, regarding hospital efficiency and service quality; we believe that our findings contribute to this debate.

We also believe that managers of teaching hospitals are doing their utmost to attract patients and recruit the best physicians and residents while maintaining very high quality standards. On the other hand, the smaller scale of non-teaching hospitals may explain some of the observed trade-offs between their SQ ratings and operational efficiency scores.

Our research has some limitations, such as limited access to data and decision makers. Also, because we only looked at hospitals in the State of New Jersey (for methodological purposes), our ability to generalize our results and conclusions is limited. For further research, we propose several options: using a larger sample, or including SQ attributes coupled with quantitative data such as waiting times. A different track could be to study other types of relationships, such as that between academic status and the added value of hospital services, or even to develop a new model for evaluating a hospital’s added value.